Training Vision Models Without Real Data with Parallax

Dec 22, 2025

Training Vision Models Without Real Data with Parallax

The Parallax Worlds platform enables users to rapidly create photorealistic simulations of real production environments. While most engineers think of simulation for testing, photorealistic simulation can in fact be very promising for generating high quality training data at scale, particularly for computer vision models.

In this example, we show how a computer vision model was trained to detect a new part completely inside the Parallax simulator, with zero real world data.

Scene Setup

For this example, we set up a table with the SO-101 Robot Arm (Part of HuggingFace's LeRobot Suite) in our office on a regular desk. The goal of this exercise is to get a computer vision model — the YOLOv11 detector — to learn to identify a coffee pod it has never seen before in its training. We choose the YOLO model here instead of a foundation model (such as SAM) because we want to test models that can run on edge compute on robot in a live production facility.

Using the Parallax platform, we first set up the scene, the table, coffee pod and robot in simulation. Note, all of this was generated using just an iPhone Pro and the Parallax website.

Gathering Training Data

Once the simulation is setup, gathering training data inside Parallax is extremely straightforward. The robot's cameras are already calibrated to exactly match the real world camera. You can simple drive the robot around, or give it a trajectory to follow and it can record all the images the robot sees from its perspective, exactly in the production environment.

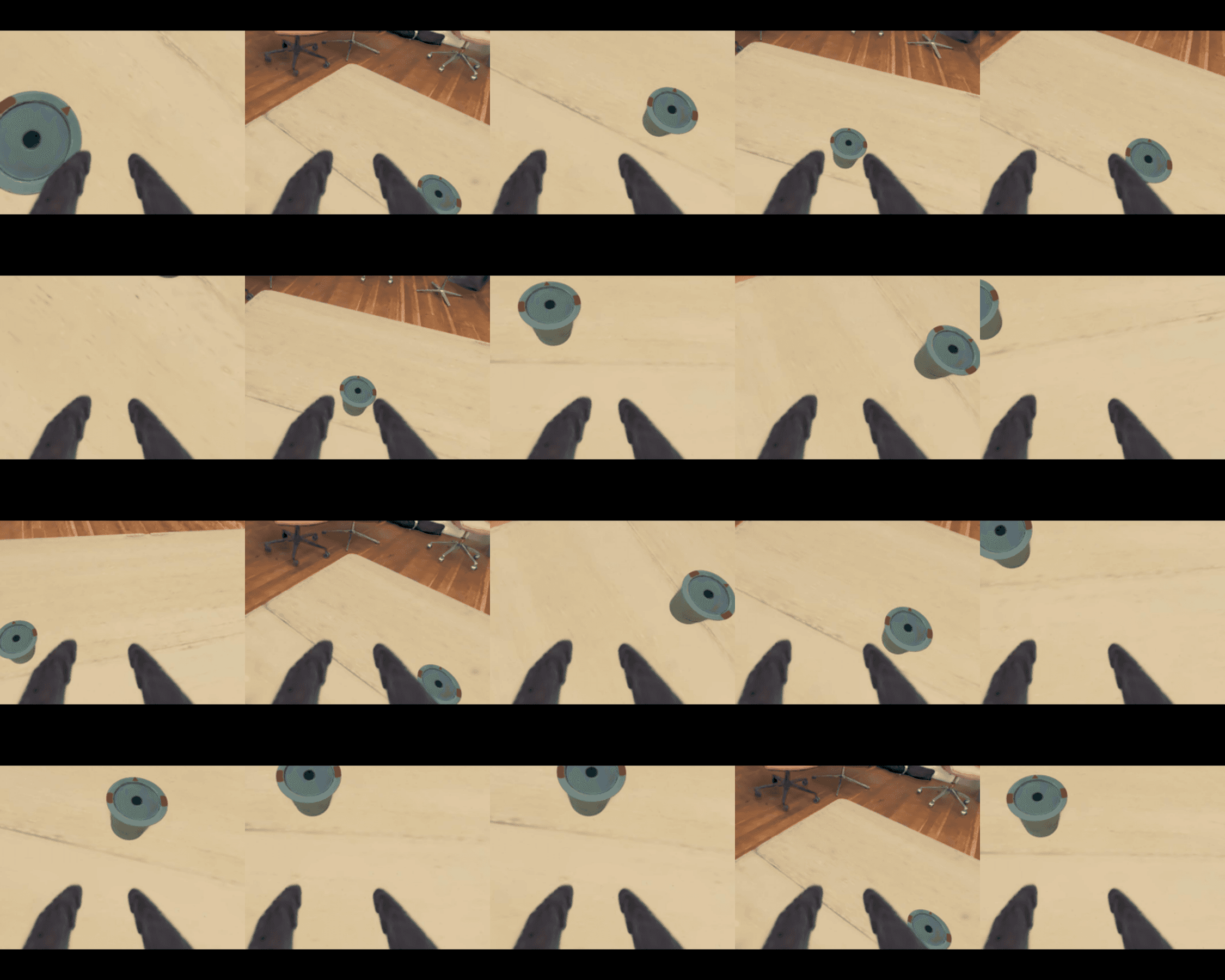

Sample of 20 training images generated from the Parallax simulator

Training the Object Detector

In this example, we will be training a YOLOv11 detector, which is an open-source computer vision model to identify the new part. We will first automatically generate ground truth labels using SAM3, which can segment videos using a text prompt, and then use the standard training code from the YOLO developers to train a bounding box detector using our simulation data.

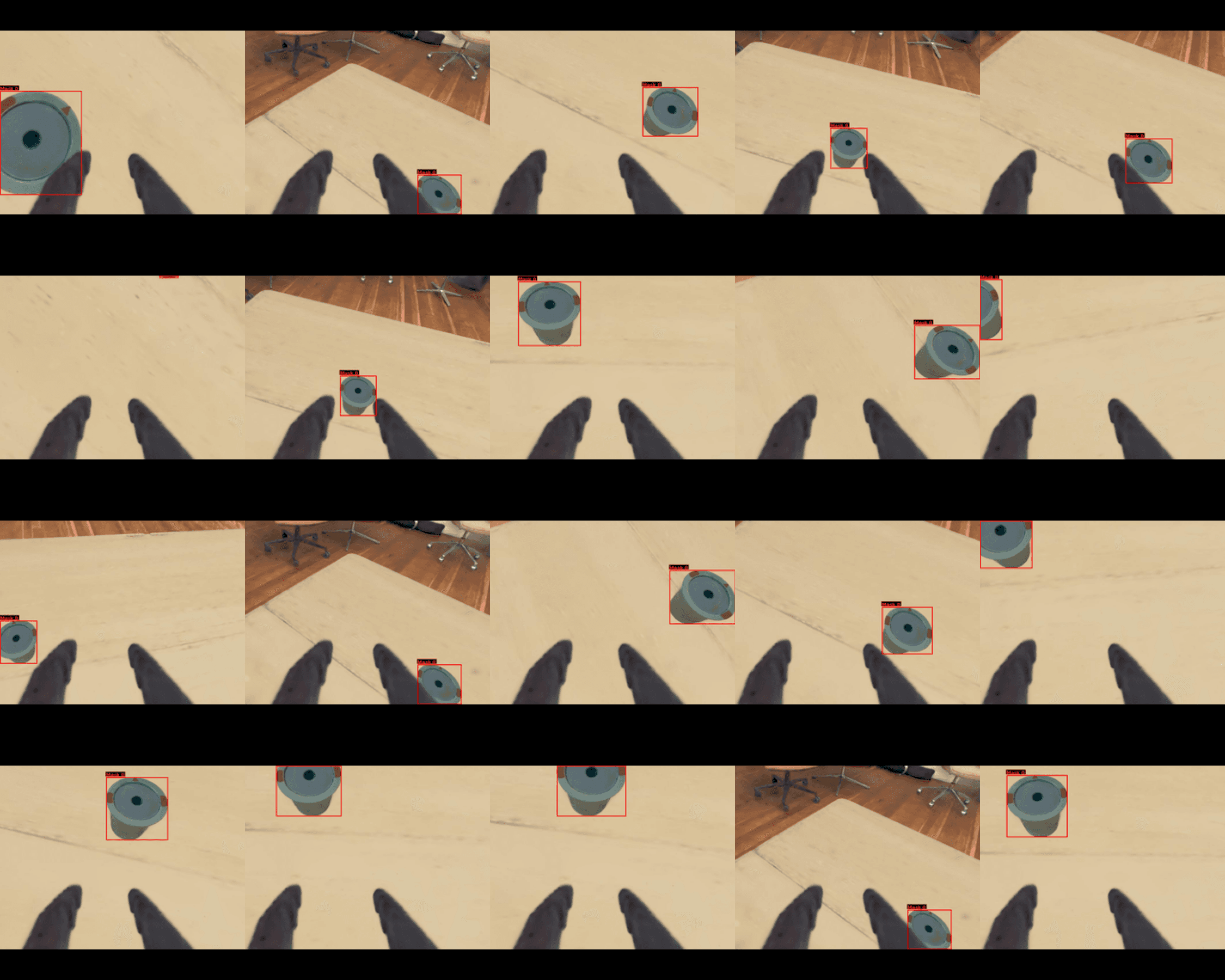

Sample of 20 training images with ground truth labels generated by SAM3 using the text prompt "Metal Disk"

Direct Transfer to the Real World

Now, without training the model on any real world data at all, we deploy the trained model on the real SO-101 arms in the real world setup. The model achieved an 89% mAP score (mean Average Precision) on a test dataset, and the results can qualitatively be seen below. You can also see the live inference speed of the system on a consumer GPU at 30 Hz.

We can see that not only is the model able to consistently detect the object, but it is robust against scene clutter, distractors, bright lights, reflections, and shadows.

Model robustness against background clutter, distractors, and lighting changes

Model robustness against relative camera position and gripper movements

This model was trained with just 400 images from simulation. By further scaling up the data collection, the performance of the YOLO model can be further improved to be deployed on a real world setup.